Metascience Reforms at NIH

This newsletter covers no fewer than four exciting metascience developments, with huge potential for improving science and medicine at NIH and elsewhere.

Metascience Research Ideas at NIH

NIH recently released a notice that it is seeking research ideas on a ton of metascience issues (hat tip to Caleb Watney).

While NIH is not providing any funding for this (???), it is interested in collaborating with researchers on data access and the like. Possible research questions include:

How can NIH improve on identifying desired outcomes and measuring impact related to its mission?

Beyond bibliometric measures, what are the indicators of scientific success?

What approaches can be used to capture successful/impactful scientific strategies and new tools and methods, and are these approaches scalable?

What measures can NIH use to capture both incremental knowledge gains and failures that ultimately contribute to scientific success?

What approaches can NIH use to measure impact of different categories of science (e.g., basic, translation, clinical) and the technology and operations used to support the science?

[several other sub-questions]

Are there methods that NIH can use to better predict and identify scientific opportunities (e.g., the emergence of gene editing technology)?

Are there approaches that could inform NIH funding decisions by measuring scientific quality, rigor, and reproducibility?

What evidence can NIH use to inform its efforts to optimize its investment in recruiting, training, and sustaining a diverse U.S. biomedical, behavioral, and social sciences research workforce?

What evidence does NIH need to improve the clinical research ecosystem? What would inform a re-envisioning of the clinical trials system to maximize quality, participant experience, accessibility, timeliness, and impact on clinical care?

What evidence could inform steps NIH can take to ensure progress on overcoming health disparities and strengthen partnerships with underserved communities and practitioners?

How should NIH assess risk in its research portfolio? What is the right amount of risk for NIH to accept as a steward of public funds?

Does the NIH funding system foster sufficient risk-taking to encourage researchers to explore high-risk research? If not, are there ways to test novel funding approaches that could be implemented at scale?

Lots of opportunities there for researchers to make a difference in informing how NIH operates, and to increase our metascience knowledge more broadly.

If you have research ideas, and don’t need extra funding, do dig into this.

The FY24 Appropriations Language

In March 2024, Congress passed NIH's long-overdue FY24 appropriations bill. The bill came with a joint explanatory statement whose first paragraph cited back to a 2023 Senate report; that report ended up being controlling because the House version didn’t make it out of committee.

Long story short, the Senate report language is binding, and NIH is now expected to engage in the following eight metascience activities over the next year or so:

Encouraging Innovation and Experimentation.--The Committee recognizes that there are many ideas for how NIH could improve its operations and funding models--such as lotteries for funding mid-range proposals, funding the person rather than the project, and more--yet there is not enough evidence to directly mandate any of these ideas. The Committee urges NIH to examine how best to create or empower a team that would engage in NIH-wide experimentation with new ideas regarding peer review, funding models, and others, so as to enhance NIH's operations and ultimately to improve biomedical progress. The Committee directs NIH to provide a report within 1 year on these efforts.

Expanding Support for Young Investigators.--NIH has been criticized for funding too many late career scientists while funding too few early career scientists with new ideas. The Committee is concerned that the average age of first-time R01 funded investigators remains 42 years old. More than twice as many R01 grants are awarded to investigators over 65 than to those under 36 years old. The Committee appreciates NIH's efforts to provide support for early-career researchers through several dedicated initiatives, including the NIH Director's New Innovator Award, Next Generation Researchers Initiative, Stephen Katz award, and the NIH Pathway to Independence Award. The Committee encourages NIH to continue supporting these important initiatives and to expand support for early career researchers by increasing the number of award recipients for these programs in future years. Finally, to better understand what is needed to advance these efforts, the Committee directs NIH to provide a report within 180 days of enactment on its full range of programs for early career scientists including the annual cost per program over the last five fiscal years and the average number of recipients per year by award. Such report shall include a “professional judgement” budget to estimate the additional funding needed to grow and retain the early career investigator pool, accelerate earlier research independence, and ensure the long term sustainability of the biomedical research enterprise.

Fund the Person, Not the Project.--While many labs are funded by R01-equivalent grants, the R35 mechanism arguably allows scientists more flexibility and freedom to pursue the best possible science. At present, only NIGMS uses the R35 to a significant extent (more than four times as often as the rest of NIH put together), with its Maximizing Investigators' Research Award [MIRA] program. The Committee directs NIH to convene an expert panel on expanding the R35/MIRA grant type such that [it] is more widely used across NIH Institutes and Centers, and to report back to the Committee within 1 year on NIH's plans for expanding the R35 along with its plans for evaluating the impact on scientific progress.

Funding Replication Experiments and/or Fraud Detection.--The Committee recognizes that many biomedical research studies have turned out to be irreproducible or even outright fraudulent. The recent Reproducibility Project in Cancer Biology showed that cancer biology studies in top journals often failed to be replicable, and a prominent line of Alzheimer's studies was recently found to be based on an allegedly fraudulent study funded by NIH in the early 2000s. Given the importance of detecting both reproducibility and fraud, the Committee provides $10,000,000 to establish a program to fund replication experiments on significant lines of research, as well as attempts to proactively look for signs of academic fraud. The Committee directs NIH to brief the Committee within 180 days of enactment on the establishment, staffing and plans for this effort in fiscal years 2024 and 2025. [On this one, the House suggested $50 million and the Senate suggested $10 million, but the two bodies couldn't agree on the dollar amount.]

Improving Clinical Trials.--The clinical trial enterprise has been criticized for conducting too many clinical trials where small size may lead to the production of limited evidence relevant to clinical outcomes. The Committee directs NIH to convene an independent panel (at least 51% of whom must be non-Federal employees) in order to assess the rate at which NIH-funded clinical trials are not of sufficient size or quality to be informative. The panel should randomly sample at least 300 NIH-funded trials from each of the years between 2010 and 2020, and should make recommendations as to how to fund fewer non-informative trials in the future. The Committee directs NIH to provide a report within 1 year as to the independent panel's findings.

Near-Misses.--The Committee recognizes that in many cases, top biomedical scientists (even Nobel winners) attest that they struggled to get NIH funding for the work leading up to their major discoveries. The failure of the NIH peer review process to recognize and award groundbreaking science is separate from the issue of hypercompetition, and warrants investigation. The Committee urges NIH to fund a major, independent study of how often this phenomenon happens, the possible reasons behind it, and potential reforms that could alleviate the problem in the future.

Reducing the Administrative Burden on Researchers.--The Committee recognizes that according to a national survey by the Federal Demonstration Partnership, federally-funded researchers report spending 44 percent of their research time on bureaucracy, including the time to prepare proposals and budgets, post-grant reporting of time and effort, ethical requirements, and other compliance activities. Although NIH and other agencies tasked with reducing administrative burden conducted extensive consultations with the research community that resulted in a 2019 final report on their implementation plans, the Committee is concerned about the status of the implementation by NIH and any plans to evaluate the outcome. The Committee directs NIH to form a Board on reducing administrative burden, with at least 75 percent representation from non-Federal organizations and at least 25 percent representation from early-career researchers (including post-docs). Within 1 year of enactment, the Board is directed to provide a report that includes an evaluation of current efforts to reduce administrative burden and to provide recommendations aiming to reduce the administrative burden on researchers by 25 percent over the next 3 years. The Committee strongly encourages NIH to put recommendations into effect as soon as practicable. The Committee requests a briefing on this effort 90 days from the enactment. The report recommendations shall be made available to the public on the agency website.

Scientific Management Review Board.--The Committee recognizes that under the NIH Reform Act of 2006 (Public Law 109-482), a Scientific Management Review Board [SMRB] was created with the specific mission of reviewing the overall “research portfolio” of NIH, and advising on the “use of organizational authorities,” such as abolishing Institutes or Centers, creating new ones, and reorganizing existing structures. Yet this Board has not met or issued a report since 2015, despite the obligation to do so every 7 years. The NIH Advisory Committee to the Director [ACD] does not have the statutory authority or mandate to serve as a substitute for the SMRB, and the Committee rejects any efforts to assign the ACD to undertake these efforts. The Committee directs NIH to reconvene the SMRB within 1 year of enactment in order to fulfill its statutory duty to advise Congress, the Secretary, and the NIH Director on how best to organize biomedical research funding.

This is a more comprehensive list of metascience projects than I’ve ever seen at any federal agency. Just imagine NIH setting up a team to experiment with grant processes, truly acting to reduce administrative burden on researchers, funding $10m+ of replication studies, expanding efforts to fund younger researchers, researching why so much Nobel-winning work was rejected in its early days, or measuring the rate of “small crappy trials” (an important metric to track if NIH hopes to improve here).

Now, the last item (on the Scientific Management Review Board) is worth watching. NIH has already been ignoring federal law for the past 9 years (i.e., the NIH Reform Act of 2006 created an advisory board that is supposed to report to Congress at least once every seven years on NIH’s performance and structure, yet it hasn’t done anything at all since 2015). Why would NIH obey mere appropriations language if it hasn’t been obeying the law? Maybe because appropriators (who hand out money) are now going to be watching.

The Cassidy Report

A while back, Senator Cassidy released a thoughtful list of questions about how to improve NIH. The Good Science Project (along with many others) submitted a response.

In May, Senator Cassidy’s office released a new white paper: “NIH in the 21st Century: Ensuring Transparency and American Biomedical Leadership.” It has many productive metascience ideas for how to improve NIH (and, for that matter, all of science).

Some highlights:

* Cassidy notes (with an admirable degree of self-awareness) that Congress probably micromanages NIH too much by tying appropriations to top-down mandates in particular areas:

Another challenge within NIH’s research portfolio is balancing the need to direct research toward specific topics of significant public health interest (referred to as “targeted research”) with investigator-initiated research. In FY23, targeted research comprised 12 percent of all new research project grants made by NIH, a total of 20 percent of all new research project grant funding awarded. This targeted funding ties back to more than 60 discrete funding levels specified by Congress in the FY23 annual appropriations report language.

On the one hand, direction from Congress and NIH senior leadership can be beneficial to focus the research community on high-impact areas of research and ensure NIH is appropriately prioritizing work to address diseases of significant unmet need. . . . However, too much direction can warp the portfolio as a whole, dissuading investigators from pursuing other research topics that could have broad benefit and to create a naturally diversified portfolio. Further analysis of NIH’s internal data could help the research community, policymakers, and stakeholders better understand how to appropriately balance these competing interests.

Exactly. Top-down mandates from Congress are rarely effective when it comes to science: the universe doesn’t easily bend to the titles of congressional initiatives. Look at how much money has been plowed into NIH’s Alzheimer’s initiative, whose website still aims to “prevent and effectively treat Alzheimer’s and related dementias by 2025,” a goal that obviously won’t be met.

Most of the greatest breakthroughs come when scientists explore areas at the boundary between two fields, or when they delve into areas that seem irrelevant. Congress would probably have a better chance of solving Alzheimer’s by putting money into botanical explorations throughout the world, or by funding studies on naked mole rats or elephants, rather than pouring money into another “Alzheimer’s Initiative.”

* Cassidy points out that NIH probably engages in a number of redundant activities, yet there isn’t a good way of figuring that out:

Reducing redundancy could improve the balance of NIH’s portfolio by making more resources available within NIH’s existing budget. Under statute, the NIH director is responsible for classifying and publicly reporting NIH-funded research projects and conducting priority-setting reviews for the agency. However, it is unclear what steps current and former directors have taken to formally conduct these reviews and whether analysis of NIH’s internal and public-facing data contributes to the reviews. These reviews and corresponding analyses should provide a unique opportunity for the NIH director to identify areas of research duplication and potential collaboration between ICs.

One area to focus on here might be data repositories and the like. NIH currently supports at least 147 data repositories. Phil Bourne once told me that he suspected NIH was wasting $1 billion a year on duplicative data resources, because NIH is so decentralized and each Institute/Center prefers to spend its money internally. Congress should dig further into this question.

* Cassidy correctly notes that we lack good metascience on NIH’s decisionmaking, and that outside analysts need better access to NIH’s internal data:

Learning from Scientific Successes and Failures

Other untapped resources include data NIH and its extramural partners possess on applications for funding and the outcomes of funded projects. NIH currently publishes data on which projects and researchers receive funding (commonly referred to as the “success rate”). However, more granular data about how specific proposals fare through the peer review process and are ultimately selected or rejected for funding are not available. Several respondents to my RFI stated that access to this data would enable researchers to conduct metascience research on the scientific process. NIH’s Office of Portfolio Analysis has supported some metascience initiatives by aggregating information related to citations of published papers and contributing to the development of best practices in the field. Piloting a process for the secure sharing of NIH application and review data with trusted researchers would help identify or validate trends within NIH processes and recommend process improvements.

Right on! There are far too many accomplished researchers who are perennially frustrated at the difficulty of getting access to NIH’s internal data or getting permission to publish their results.

There is no reason for NIH to be so stingy about sharing data with outside researchers, yet it has withheld data even from the National Academies! See this quote from a National Academies report commissioned by Congress on NIH’s small business program: “Although the committee requested priority score information from NIH, this information was not provided because of confidentiality concerns. If future analyses are to be more robust and enable stronger statements on program impact, NIH will need to find a way to provide this information to researchers as it and other agencies have done in the past.”

Remarkable intransigence on NIH’s part here. There are many ways to protect confidentiality while still allowing innovative research to occur; just ask Census or Treasury.

* Cassidy notes that science benefits when everyone is free to publish null or negative results, but that doesn’t happen nearly often enough:

Researchers also often lack insight when experiments produce null or inconclusive results (collectively referred to as “negative results”) because these findings are typically not published in academic journals. Yet, negative results can themselves advance lines of scientific inquiry, such as questioning or ultimately refuting a hypothesis, pointing investigators in more fruitful directions, or identifying flaws in a methodology. While some peer-reviewed journals do accept negative results for publication, the direct expenses and opportunity costs to the researcher associated with preparing an article, along with any perceived reputational costs associated with an unsuccessful experiment, may serve as a disincentive to publish these results. NIH should consider strategies to encourage the voluntary sharing of negative results, such as the establishment of a negative results repository within the National Library of Medicine.

NIH should not only do this but go further, piloting a requirement that every principal investigator on an NIH grant write up at least 5 pages per year per grant on one or more failures or null results and post that document on a public server such as bioRxiv or OSF. NIH could also do random audits of researchers who have never reported negative/null results in the past.

Anyone who has no negative or null results to report may well be engaged in academic fraud (no one, not even Einstein, is so perfect that they never have a null result to report). I’ve argued elsewhere that sharing null/negative results might be the single best way to improve both innovation and reproducibility.

* Cassidy correctly notes that NIH’s peer review process can be biased against truly innovative research, which will often seem too risky or too outside-the-box for peer review committees.

Incentivizing Innovation

Respondents to my RFI noted that NIH’s extramural research programs tend to reward incremental science, rather than high-risk, but potentially transformational studies and identified the current approach to peer review as a driver of incrementalism.

Peer review is foundational to the NIH model, and peer reviewers provide an invaluable service to the research enterprise by volunteering their time and expertise. However, some respondents noted that the heavy involvement of subject matter experts—rather than “generalists” who are not as embedded in a particular area of research—can bias study sections toward the approaches and methodologies favored by such experts.

Respondents also noted that this phenomenon leads peer review committees to focus heavily on the mechanics of the proposed methodology and likelihood of success, rather than the overall scientific vision and potential impact of the proposal.

The structure of funding applications lend themselves to such a discussion during peer review: R01 applications consist of a one-page statement of objectives and a 12-page research strategy, which includes discussion of proposed methodology and preliminary results.

As a result, respondents noted that researchers face significant pressure to demonstrate proof of concept through robust preliminary data prior to applying for funding. Respondents also noted inconsistencies between peer reviewers, review cycles, and situations when peer reviewers turn over mid-review. In some cases, researchers might even tailor their application to target specific study sections that may be more receptive to their proposal.

To address these myriad challenges, NIH should pilot and evaluate multiple approaches to changing the application and peer review process, including staffing committees with more generalists, streamlining application discussion of methodology and preliminary results, regularly reviewing the focus and members of study sections to promote alignment with current science, and improving training of peer reviewers to make reviews more consistent.

Yes, yes, and yes. We need more experiments with peer review. NIH should follow NSF’s example in this regard, and should partner with multiple outside evaluators and researchers to conduct experiments on peer review innovations.

* As to NIH’s SBIR and STTR programs, Cassidy proposed a number of reforms to make those programs more successful:

NIH’s Small Business Innovation Research (SBIR) and Small Business Technology Transfer (STTR) programs also demonstrate room for administrative improvement to better achieve program goals. Respondents specifically cited as barriers NIH’s longer window to make awards compared to other Federal departments that operate their own SBIR and STTR programs. For example, the Department of Defense takes three months, on average, to notify successful applicants of an award, compared to NIH’s six and a half months. NIH also uses similar program management and peer review structures for its SBIR and STTR programs to those described above for other extramural research.

A recent National Academies of Sciences, Engineering, and Medicine review recommended that Congress and NIH explore piloting ways to make NIH SBIR and STTR programs more timely and responsive to the needs of small businesses. The John S. McCain National Defense Authorization Act for Fiscal Year 2019 directed the Department of Defense to establish a similar pilot program to speed up its SBIR and STTR review and awards process.

Some respondents to my RFI recommended NIH take a more venture capital-style approach to its administration of the program by exempting from, or dramatically reworking, the peer review process for SBIR and STTR to speed application review timeframes and hiring individuals with venture capital or other business expertise to serve as program officers.

All of this is exactly what the Good Science Project proposed, and we obviously agree. In keeping with the National Academies’ report on this issue, NIH should completely reorient its peer review process for SBIR and STTR.

* Cassidy proposed (also echoing the Good Science Project) that NIH try out term-limited program officers who rotate in and out, as NSF does.

Program officers also play a key role in NIH’s extramural research prioritization and funding decisions. As staff scientists responsible for managing grant portfolios, program officers advise prospective applicants on the relevance of their proposed projects, develop requests for proposals and targeted research opportunities, and provide expertise to the agency. NIH program officers are typically permanent employees of the agency who develop specific areas of interest and expertise over time. Respondents pointed out that other agencies, such as the NSF, employ temporary program officers through “rotator” programs. Similarly, advanced research projects agencies across the federal government limit the term of their program managers to only a few years. These time-limited appointments enable more individuals to work within the agency, inject the agency with fresh ideas and up-to-date scientific knowledge, and take their understanding of the agency and its priorities back to their research positions following the conclusion of their rotation. NIH should pilot a rotator approach to fill program officer positions and related key roles.

The Good Science Project obviously agrees with the ideas here, but we’d add that it may make sense to start with pilot experiments: e.g., NIH could run a randomized study that imposes term limits on some study sections and scientific review officers (SROs) but not others, and then examine the results after a few years.

* Cassidy all but cited the Good Science Project’s comments on soft money, which we called a conflict of interest like no other: scientists need to do whatever funders say so as not to lose their job, lab, or salary.

Respondents cited institutions’ use of “soft money”—reliance upon NIH funding to reimburse themselves, in part or in whole, for researchers’ salaries. One respondent referred to this practice as a rarely discussed but glaring conflict of interest [exactly our language]: if researchers are dependent upon successfully securing NIH grants in order to retain their jobs, they are likely to avoid taking risks in the projects they propose for NIH funding. This practice also has a disproportionately negative effect on early-stage investigators, who typically have lower success rates than established investigators (see Figure 2).

What to do about this? A tough question. Cassidy’s white paper concluded this section with the following recommendation:

Taken together, these observations demonstrate severe structural problems in how government and academia together finance biomedical researchers. Some respondents recommended increasing the amount of a single NIH grant that can be used for salaries. On the other hand, the Next Generation Researchers Initiative Working Group previously explored lowering this amount to reduce institutions’ reliance on NIH funding for salaries. Congress and NIH should explore this problem in further detail and identify policy options that ensure NIH and institutions each provide appropriate degrees of support for federally funded academic researchers.

Bruce Alberts (former National Academies president and former Science editor) has already proposed a partial solution: NIH could “require that at least half of the salary of each principal investigator be paid by his or her institution, phasing in this requirement gradually over the next decade.” Alternatively, “the maximum amount of money that the NIH contributes to the salary of research faculty (its salary cap) could be sharply reduced over time, and/or an overhead cost penalty could be introduced in proportion to an institution’s fraction of soft-money positions (replacing the overhead cost bonus that currently exists).”

Soft money is a scourge on biomedical research. We need to figure out how to limit its scope.

* For 2 years, the Good Science Project has promoted the idea that NIH should reconstitute the Scientific Management Review Board, which the NIH Reform Act of 2006 established to advise Congress on NIH’s performance and structure.

Cassidy agreed:

Rebuilding public trust in NIH will require improving meaningful transparency and public discourse about NIH operations. While NIH is a highly visible agency, members of Congress have expressed concern about a lack of engagement with congressional oversight requests related to the COVID-19 pandemic response. Similarly, respondents noted instances of the agency deprioritizing statutory requirements, such as the lack of engagement of the Science Management Review Board (SMRB). Established under the NIH Reform Act, the SMRB is tasked with reviewing NIH’s structure and making recommendations every seven years. However, NIH has not convened the SMRB since 2015, and one of its only recommendations prior to that time—the consolidation of two ICs with similar research focuses—was disregarded by agency leadership. As an initial step to improving transparency into NIH operations, Congress should reconstitute the SMRB and incorporate the perspectives of individuals from outside the scientific community to inform SMRB recommendations to the director.

* The Good Science Project commented on the need to validate research results, as shown by numerous cases of fraud in the Alzheimer’s field. Cassidy’s memo agreed:

Research misconduct is another major issue facing NIH. Recent high-profile cases of research misconduct, specifically within Alzheimer’s research, and the potential applications of artificial intelligence to data fabrication and falsification raise questions about how NIH can protect the integrity of its research investments. HHS’ Office of Research Integrity (ORI) is responsible for developing research misconduct policies and conducting investigations on behalf of NIH and other HHS public health agencies. Last fall, ORI proposed updates to its research misconduct regulations for the first time since 2005. The office has a small footprint relative to the volume of NIH-funded research and does not have independent investigative authorities. A recent editorial in Science noted that NSF’s Office of the Inspector General (OIG) tends to identify more instances of research misconduct than ORI, despite NIH having a significantly larger extramural research portfolio. Given these factors, ORI’s ability to proactively identify or prevent research misconduct within the NIH-funded research portfolio is likely limited.

Within NIH’s authorities, the agency recently issued a data sharing policy that will enable researchers to more easily validate or refute claims. New technologies could also play a role in helping to quickly identify inconsistencies in research claims. For example, the Defense Advanced Research Projects Agency (DARPA) previously carried out the Systematizing Confidence in Open Research and Evidence (SCORE) program, which demonstrated the feasibility of using algorithms to validate claims. Congress and NIH should identify opportunities to leverage these types of technologies, coupled with data sharing policies, to restore public confidence in research claims. Congress should also review the statutory authorities of HHS’ ORI to identify any areas that could be strengthened, clarified, or updated to reflect current research misconduct challenges. This review should also take into consideration the complementary role of the Department of Health and Human Services’ (HHS) OIG.

As we’ve written before, NIH should proactively fund efforts at both replication and fraud detection. Using algorithms to detect research problems is a promising avenue to explore further.

In short, hats off to Sen. Cassidy and his staff on the HELP Committee who put this thoughtful and thorough memo together.

The House Report

Even more recently, Rep. Cathy McMorris Rodgers (current chair of the House Energy & Commerce Committee, which oversees NIH) and Rep. Robert Aderholt (chairman of the Labor, Health and Human Services, and Education Appropriations Subcommittee) wrote a STAT piece arguing for NIH “reform and restructuring,” along with the release of a white paper going into further details.

A good deal of their white paper focuses on biosecurity. I won’t address that subject in detail, because I don’t have much to add to what others have already said.

Instead, I’ll focus on other topics, some of which may be controversial but are still worth discussion by policymakers.

Reorganizing and Streamlining NIH

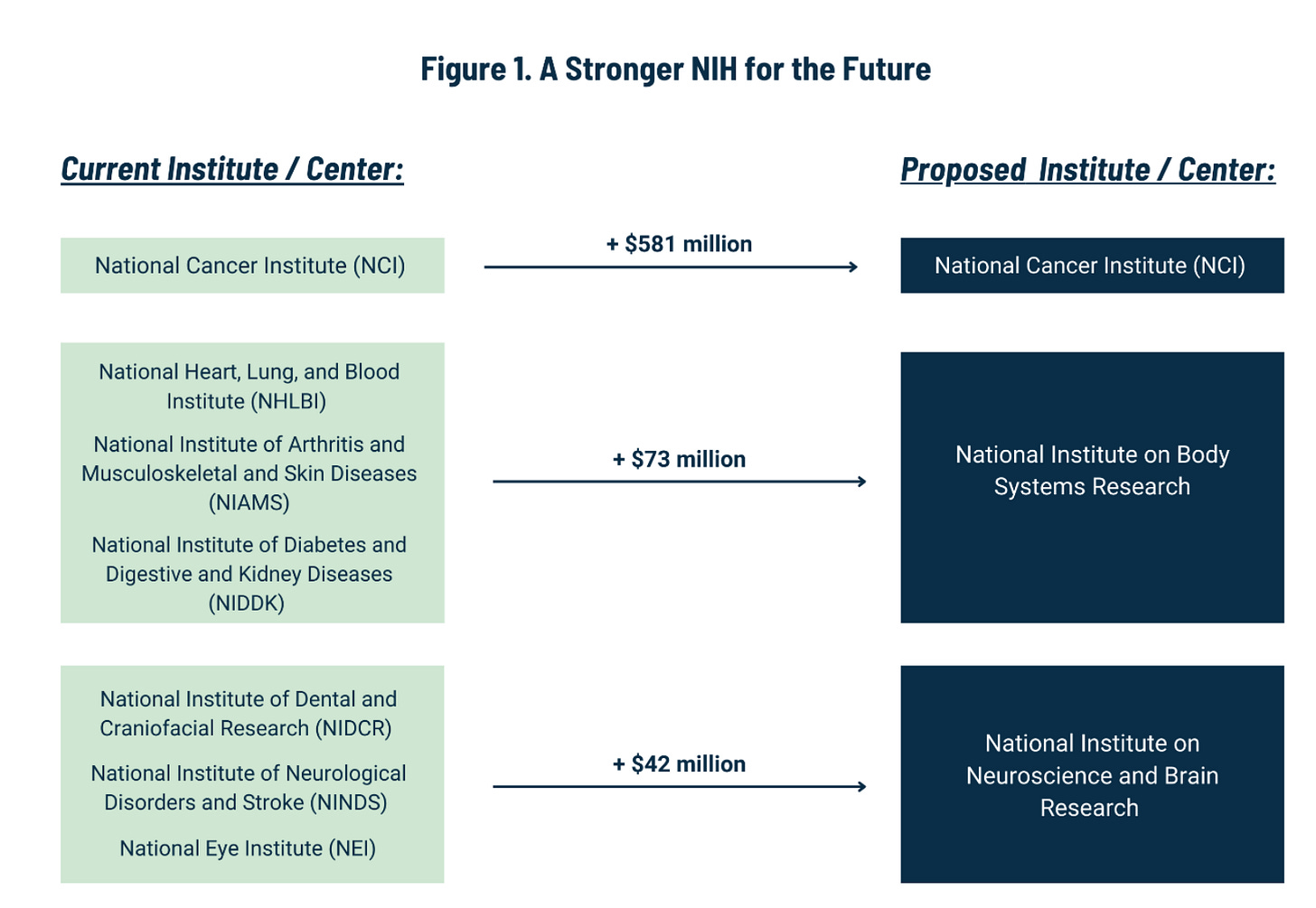

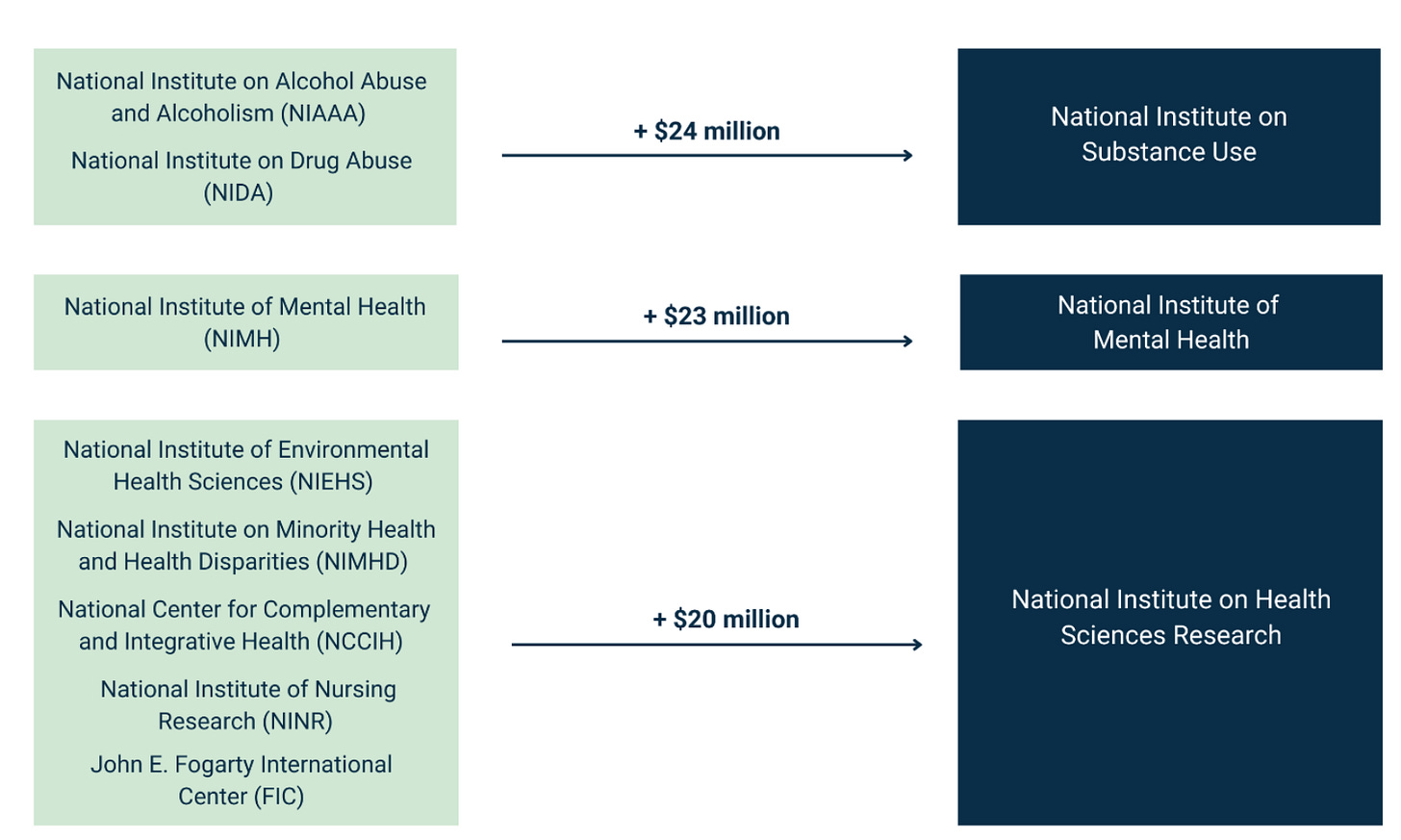

The white paper has an ambitious plan to reduce the number of ICs from 27 to 15. It includes the following chart:

Before getting into the details, I’ll just say that this is exactly the policy conversation that NIH directors have wanted since the 1990s (if not further back). Harold Varmus wrote in 2001 about the “proliferation” of institutes at NIH. Later in his memoir (which is well worth reading in its entirety), he went into greater detail:

Anyone who looks at an organizational chart of the NIH will be immediately struck by its complexity, especially by the multitude of institutes and centers, now twenty-seven, then twenty-four, each with its own authorities, leaders, and (nearly always) appropriated funds.

This, of course, is not the way the agency was initially designed. The individual components have been created over the past seventy years, in a fashion that reflects one of the most appealing things about the NIH—its supporters’ passionate loyalty to the idea of using science to control disease. Each of the centers and institutes was legislated into being by members of Congress, commonly working together with citizen advocates, who believe that some aspect of biomedical research—a specific disease (like cancer or arthritis), a specific organ (like the heart or lung), a time of life (like aging or childhood), or a discipline (like nursing or bioengineering)—can benefit from the creation of a unit of the NIH devoted to it.

But the resulting proliferation of institutes and centers has not been healthy in every way. It has doubtless helped to drive budgetary growth for the NIH as a whole, but it has also created administrative redundancies. (Some of these were partly reversed during my time at the agency by the creation of inter-institute administrative centers.) The multitude of institutes has helped to focus attention on many important diseases and conditions and to sustain public advocacy for the NIH. At the same time, it has placed some topics at a disadvantage, because they are overseen by relatively small institutes that may lack the capacity to conduct large-scale efforts, like major clinical trials. Most plainly, the diversity of institutes complicates the central management of the NIH by the Director.

. . .

During my final year at the NIH, I expressed my anxieties about the continuing proliferation of autonomous units at the NIH in public talks and extended the discussion in an essay published in Science magazine shortly after I left the agency. In these presentations, I argued that, unless proliferation was stopped, the NIH would become an unmanageable collection of over fifty units within a decade or two at its current rate of growth. I then took the argument a step further, and proposed that the current organization of the NIH be reconsidered and redesigned, creating six centers of approximately equal size, including one for the director, giving him or her increased authority over coordinated programs, new initiatives, and budgets.

Elias Zerhouni told me that the reason for the Scientific Management Review Board in the NIH Reform Act of 2006 was that he wanted a place to have that bigger-picture discussion about NIH’s overall structure, portfolio, and future direction.

Regardless of the merits of any actual proposal, the very fact that top policymakers are thinking about the big picture is a step forward.

On the merits of the white paper’s plan to merge various ICs, I do have a few quibbles.

ARPA-H

ARPA-H shouldn't be lumped together with several other NIH entities. The whole point of ARPA-H was to do something different without the usual NIH bureaucracy, and various congressional leaders already went through a long back-and-forth about whether ARPA-H could be affiliated with NIH at all.

The compromise position (in section 2331 here) was that ARPA-H couldn’t employ anyone who worked for NIH in the prior 3 years, that ARPA-H wasn’t allowed to be on the NIH campus but had to create offices in “not less than 3 geographic areas,” and more.

There’s no reason to backtrack on all of these structural provisions that keep ARPA-H relatively independent.

Combining ICs: More Discussion Needed

It makes sense to combine certain ICs (like the institutes on alcoholism and drug abuse, even though that idea got shot down last time around). But other proposed combinations might need more analysis. For example, why would we combine dental research with eye research and neurological research? And in the bigger picture, why not eliminate certain ICs rather than combining them with others? Why not add, say, a National Institute of Nutrition, or a National Center for Data Resources (which could take over and streamline all of the many data repositories that NIH currently supports)?

In any event, combining and rearranging ICs might have a better shot if budgets were being increased, not tightened. In today’s environment, any legislation combining a bunch of ICs would likely cause literally every patient advocacy group, disease advocacy group, medical research lobby, etc., to descend on Congress to protest that their own issue might be shortchanged.

If budgets were increasing, however, it would be possible to tell such folks, “Don’t worry! We’re actually increasing the line item for your issue by $__ million per year.” Then, it might be more feasible to make structural reforms.

Aging and Dementia

Turning the National Institute on Aging into a National Institute on Dementia is just truth-in-labeling: in the past several years, Alzheimer's research has come to dominate NIA's portfolio. See this useful overview by Eli Dourado and Joanne Peng.

On the other hand, if we’re going to think about wholesale reorganization of NIH, why not put Alzheimer's and other dementias into the National Institute for Neurological (fill-in-the-blank)?

As Dourado and Peng argue, we need an actual National Institute on Aging—not one that is dominated by Alzheimer’s, but one that actually addresses a broad spectrum of aging-related questions in biology, including basic science on whether we can slow the pace of cellular aging, etc.

Peer Review at NIH

There’s a major structural issue that is unaddressed in the white paper: Why should the Center for Scientific Review have a monopoly on conducting peer review for the overwhelming majority of what NIH funds? NIH should be experimenting with different approaches to peer review, like the National Science Foundation is doing (see here).

If NSF had the same degree of centralized control over peer review, its TIP Directorate might not have been able to announce a partnership to test new ideas for how peer review should work. Centralization and bureaucracy likely create too much uniformity and "lowest common denominator" thinking in science. In the mid-20th century, it would have been a bad idea if Bell Labs and Xerox PARC and the Laboratory of Molecular Biology at Cambridge all had to seek funding that was subject to the same centralized source of peer review.

That said, the Center for Scientific Review could take the initiative to launch a series of ambitious experiments on different models of peer review, and could even do cluster randomized experiments across its hundreds of peer review panels (i.e., study sections). By doing so, CSR would demonstrate that it is keeping up with NSF in terms of innovation and metascience.
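A cluster randomized experiment of the kind described above could be set up in a few lines. In this minimal sketch, each study section (not each application) is the unit of randomization. Outcomes are averaged within each section before arms are compared, because applications reviewed by the same panel are not independent of one another. The study section IDs, the review-model names, and the outcome measure are all hypothetical placeholders, not anything CSR actually uses.

```python
import random
from collections import Counter


def assign_clusters(study_sections, arms, seed=0):
    """Randomly assign whole study sections (clusters) to review-model arms.

    Shuffling and then dealing sections out round-robin keeps arm sizes
    within one of each other.
    """
    rng = random.Random(seed)  # seeded so the assignment is reproducible and auditable
    shuffled = list(study_sections)
    rng.shuffle(shuffled)
    return {section: arms[i % len(arms)] for i, section in enumerate(shuffled)}


def arm_means(outcomes, assignment):
    """Compare arms using one summary value per study section.

    `outcomes` maps each section to a list of per-application outcomes
    (a hypothetical measure). Averaging within each section first means
    every cluster counts once, matching the unit that was randomized.
    """
    cluster_means_by_arm = {}
    for section, values in outcomes.items():
        cluster_mean = sum(values) / len(values)
        cluster_means_by_arm.setdefault(assignment[section], []).append(cluster_mean)
    return {arm: sum(m) / len(m) for arm, m in cluster_means_by_arm.items()}


if __name__ == "__main__":
    # Hypothetical identifiers for 200 study sections and three review models.
    sections = [f"SS-{i:03d}" for i in range(1, 201)]
    arms = ["status_quo", "modified_lottery", "anonymized_review"]
    assignment = assign_clusters(sections, arms, seed=2024)
    print(Counter(assignment.values()))  # 67, 67, and 66 sections per arm
```

A real trial would presumably also stratify by scientific area and pre-register its outcome measures; this sketch leaves both out to keep the core idea visible.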

Term Limits

I’ve heard policymakers ask about NIH term limits for the past 2 years, and the white paper makes a recommendation: “limit every IC Director to a five-year term, with the ability to serve two, consecutive terms, if approved by the NIH Director.”

The white paper’s rationale for term limits is this:

The lack of turnover within NIH’s leadership may contribute to an inability to adapt to evolving expectations in the workplace or to proactively change an existing workplace culture. Investigations conducted by the Committee on Energy and Commerce have raised concerns about the adequacy of the NIH’s response to allegations of misconduct, including sexual harassment complaints, within the NIH and at grantee institutions.

Responsiveness to sexual harassment is an important issue, to be sure, but I don’t see why it’s the only rationale given for adopting term limits for IC Directors. Other rationales might include: 1) the need to improve innovation by bringing in new people with new ideas, and 2) the fact that no one person (no matter how brilliant) should be allowed to dominate an entire area of funding (such as Alzheimer’s) for a generation.

In any event, it’s probably a good idea. I’ve seen no evidence that NIH is improved by allowing IC Directors to stay in place for 3+ decades.

Indeed, perhaps we should think about term limits for lower-level employees, as is the case at DARPA. The nice thing about NIH is that it’s big enough that we could literally do a randomized experiment that sets term limits for some program officers but not others. It might take a long time to see the results, but that time is going to pass anyway—we might as well set ourselves up to learn something in the meantime.

Regular NIH Review

The white paper recommends a new “congressionally mandated commission to lead a comprehensive, wholesale review of the NIH’s performance, mission, objectives, and programs. Such review should include regular, timely public reports and updates and conclude with clear, actionable recommendations for improvement. The commission should include a sunset to require Congress to revisit the recommendations and subsequent implementation, to avoid a similar outcome as the SMRB.”

This is all a good idea, but it’s not clear to me what we gain from creating a new commission as opposed to just demanding that NIH comply with the 2006 law that mandated the SMRB (Scientific Management Review Board) with a similar mission. If NIH didn’t bother to obey the 2006 law, why would adding a sunset requirement make a difference?

Indirect Costs

The white paper makes several suggestions for dealing with indirect costs, whose negotiated rates are often 60-70% of direct costs and sometimes over 100%:

Consider alternative mechanisms to limit indirect, or F&A, costs, such as tying the indirect cost rate to a specific percentage of the total grant award, either universally or for certain designated entities; capping indirect costs at a graduated rate dependent on a recipient’s overall NIH funding; or providing incentives or preferences to recipients with established and proven lower indirect costs.
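One reason the first option matters: a negotiated indirect rate is charged on top of direct costs, so it is not the same number as indirect costs' share of the total award. As a simplifying assumption (in practice the rate applies to a modified direct-cost base that excludes items such as equipment), let $D$ be direct costs, $r$ the indirect rate, $I$ indirect costs, $T$ the total award, and $s$ the indirect share of the total:

```latex
\begin{aligned}
I &= rD, \qquad T = D + I = (1+r)\,D,\\
s &= \frac{I}{T} = \frac{r}{1+r}, \qquad r = \frac{s}{1-s}.
\end{aligned}
```

Under that assumption, a 60% rate means indirect costs are 37.5% of the total award, a 70% rate about 41%, and a 100% rate exactly 50%. Going the other way, capping indirect costs at 20% of the total award corresponds to a 25% rate on direct costs.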

As is, this proposal would likely face a tough battle, akin to the Trump administration’s idea of limiting indirect costs to 10%. Yes, indirect costs were limited to “8% in 1950, then revised to 15% in 1958, and then raised to 20% in 1963,” so it is clearly possible for a world to exist in which universities function with indirect costs at that level. But you can’t unring a bell: Now that universities have expanded over the past 60 years and have come to depend on indirect cost reimbursement, it’s tough to unwind investments made in buildings, etc.

At the same time, there may be a win-win scenario here.

Much of the increase in indirect costs over the past 60 years is due to federal regulation and bureaucratic requirements.

See this chart from COGR, which only goes back to 1991!

What if we came to an overall agreement: Limit indirect cost reimbursement to ___% on federal grants, but at the same time, Congress/OMB/etc. would agree to reduce the bureaucratic requirements to 20 rather than 200. We’d have to make some tough choices as to which regulations to eliminate, but ultimately we might be better off.

Grant Limitations

The white paper makes the following recommendation: “Grant Recipients Must Remain Dynamic—focus on providing grants and awards only to primary investigators that do not have more than three ongoing concurrent NIH engagements.”

I’m sympathetic to this idea, but ultimately it would likely meet the same fate as the Grant Support Index (which would have capped investigators at roughly three grants), an idea that was floated by NIH in 2017 and then abandoned within a month due to fierce opposition. One scientist told the Boston Globe, “If you have a sports team, you want Tom Brady on the field every time. You don’t want the second string or the third string.”

Regardless of the arguments for limiting NIH grants to 3 per investigator, the problem is this: The idea amounts to taking away money from the most politically powerful researchers and universities in the US. Such a proposal has no more chance of success than “defund the police.”

It would be politically more palatable to focus on increasing funding to areas that are underfunded—i.e., more funding for younger investigators, more funding for people with an outside-the-box idea, etc. Similarly, while “defund the police” was a massive failure, the same general idea has been more successful when folks focus not on taking away money from the police, but on allocating more funding to mental health or homeless shelters.

Conclusion

In the grand scheme of things, it is heartening to see so much attention being paid to important issues about how NIH is organized. Hopefully we’ll see more discussions like this in the next year.

My view of the problem with targeted research funding is that it inevitably gets captured by existing interests. Without some outside force causing budgets to increase, they naturally decrease as political pressure leads to belt-tightening and civil servants are rewarded for "saving money" (an extremely shortsighted description, since research funding has one of the best long-term returns on investment) rather than enabling new ideas. Therefore, when the only increases are coming from targeted initiatives, PIs figure out how to rephrase their current research to match the targets. Basically, it's just a waste of everyone's time to do things this way. J. Storrs Hall's book "Where is My Flying Car?" discusses how this happened to the National Nanotechnology Initiative from 2000 (you'll notice that 25 years later, we still don't have nanotechnology), and the same thing is happening now for AI, quantum, etc.

The solution is simple: a dollar for dollar match between targeted funding and general unrestricted research funding. But simple doesn't mean easy: doing this would require discipline and consistency from both funding agencies and Congress, and those are not in abundant supply.